- Nvidia (NASDAQ:NVDA) unveiled its next‑generation Rubin AI platform at CES, introducing six codesigned chips and new rack‑scale systems aimed at dramatically accelerating AI training, inference, and agentic reasoning

- Major cloud providers, AI labs, and hardware makers plan to adopt Rubin, positioning it as foundational infrastructure for future large‑scale AI factories and long‑context multimodal models

- The launch comes amid a global AI‑driven chip shortage, where soaring demand for advanced components like HBM and storage devices is straining supply chains—an environment in which Nvidia’s leadership further intensifies demand pressures

- Nvidia stock (NASDAQ:NVDA) opened trading at US$187.97

Nvidia (NASDAQ:NVDA) opened this year’s Consumer Electronics Show with what it called the beginning of the “next generation of AI,” unveiling its new Rubin platform—a sweeping, rack-scale AI supercomputing architecture built around six newly codesigned chips aimed at dramatically accelerating training, inference and agentic AI workloads.

Named in honour of astronomer Vera Rubin, whose pioneering work transformed our understanding of dark matter, the platform represents the most ambitious system-level redesign in Nvidia’s history. According to the company, Rubin will power the world’s next wave of large-scale AI factories and deliver up to 10× lower cost per token compared to its predecessor, the Blackwell generation.

A fully codesigned, six‑chip AI platform

At the heart of Rubin is a coordinated suite of components built to function as a single, tightly optimized AI supercomputer:

- Nvidia Vera CPU — an 88‑core Armv9.2 processor designed for reasoning-heavy agentic AI.

- Nvidia Rubin GPU — featuring 50 petaflops of NVFP4 compute and a 3rd‑generation Transformer Engine.

- Nvidia NVLink 6 Switch — enabling record-breaking GPU‑to‑GPU bandwidth of 3.6TB/s per GPU, with a full rack delivering 260TB/s.

- ConnectX‑9 SuperNIC, BlueField‑4 DPU, and Spectrum‑6 Ethernet Switch — the networking and security backbone enabling multi‑tenant, confidential AI at scale.

This “extreme codesign,” the company said, slashes training times and reduces inference token costs while enabling massive mixture‑of‑experts (MoE) models with a quarter of the GPUs that Blackwell requires.

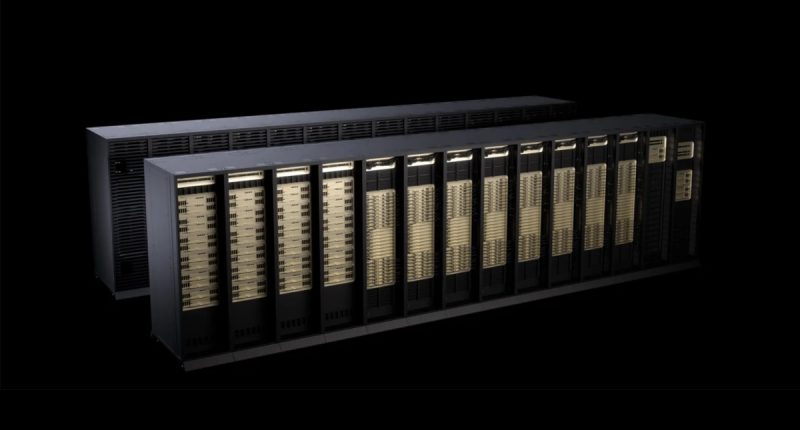

Two key systems: Vera Rubin NVL72 and HGX Rubin NVL8

Nvidia introduced two main system configurations designed to meet different deployment needs:

Vera Rubin NVL72

A 72‑GPU, 36‑CPU rack‑scale system integrating NVLink 6, BlueField‑4 and ConnectX‑9.

It is the first rack‑scale platform to offer full Nvidia Confidential Computing across GPU, CPU and NVLink domains—critical for hyperscalers training proprietary, high‑value models.
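As a rough sanity check, the 260TB/s rack figure quoted above is consistent with the per‑GPU NVLink 6 number multiplied across the 72 GPUs in an NVL72 rack. The short sketch below assumes the rack total is a simple sum of per‑GPU bandwidth, which the announcement does not state explicitly; the constant and function names are illustrative only.

```python
# Assumption: rack bandwidth is the plain sum of per-GPU NVLink 6 bandwidth.
GPUS_PER_RACK = 72          # Vera Rubin NVL72, as described in the article
NVLINK_TBPS_PER_GPU = 3.6   # TB/s per GPU, as quoted in the article
QUOTED_RACK_TBPS = 260      # TB/s per rack, as quoted in the article


def aggregate_bandwidth_tbps(gpus: int, per_gpu_tbps: float) -> float:
    """Return total bandwidth if every GPU contributes its full per-GPU rate."""
    return gpus * per_gpu_tbps


rack_tbps = aggregate_bandwidth_tbps(GPUS_PER_RACK, NVLINK_TBPS_PER_GPU)
print(f"{rack_tbps:.1f} TB/s")  # 259.2 TB/s, which rounds to the quoted 260
```

Under that assumption, the quoted rack number is the per‑GPU figure scaled by 72 and rounded up.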

HGX Rubin NVL8

An eight‑GPU server board designed for use with traditional x86 platforms.

Nvidia expects it to power the next wave of generative AI servers in both enterprise and research environments.

Both systems fit into the company’s DGX SuperPOD reference architecture for scaled-out deployments.

Breakthroughs in networking and AI‑native storage

To keep pace with the escalating bandwidth demands of video generation, long‑context reasoning and MoE models, Nvidia introduced Spectrum‑6 Ethernet, an AI‑tuned networking architecture powered by 200G SerDes, co‑packaged optics and new AI‑optimized fabric technology.

The company also unveiled its Inference Context Memory Storage Platform, powered by BlueField‑4, which allows AI systems to efficiently reuse key‑value cache data—essential for next‑generation, multi‑turn “agentic” applications.
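To illustrate why key‑value cache reuse matters for multi‑turn workloads, here is a minimal toy sketch. It is not Nvidia's platform or API; the class `PrefixKVCache` and the function `run_turn` are hypothetical names, and the strings stand in for real attention key/value tensors. The idea shown is that when a new turn extends an earlier conversation, only the new tokens need to be processed if the earlier prefix's state can be fetched from storage.

```python
from typing import Dict, List, Tuple


class PrefixKVCache:
    """Toy store mapping a token prefix to its (placeholder) key-value state."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[int, ...], List[str]] = {}

    def longest_prefix(self, tokens: List[int]) -> Tuple[int, List[str]]:
        """Return (length, cached state) for the longest cached prefix of tokens."""
        for end in range(len(tokens), 0, -1):
            state = self._store.get(tuple(tokens[:end]))
            if state is not None:
                return end, list(state)
        return 0, []

    def put(self, tokens: List[int], state: List[str]) -> None:
        """Save the state computed for the full token sequence."""
        self._store[tuple(tokens)] = list(state)


def run_turn(cache: PrefixKVCache, tokens: List[int]) -> int:
    """Process one turn and return how many tokens had to be recomputed."""
    reused, state = cache.longest_prefix(tokens)
    for tok in tokens[reused:]:
        state.append(f"kv({tok})")  # stand-in for real key/value tensors
    cache.put(tokens, state)
    return len(tokens) - reused


cache = PrefixKVCache()
turn1 = [101, 7, 8, 9]
turn2 = turn1 + [10, 11]       # second turn extends the first
print(run_turn(cache, turn1))  # 4: nothing cached yet
print(run_turn(cache, turn2))  # 2: the first four tokens are reused
```

A production system would store tensors rather than strings and would manage eviction and placement across memory tiers, none of which this sketch attempts.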

BlueField‑4 additionally introduces a new security paradigm known as ASTRA, giving operators a single trusted control point for managing large-scale, bare‑metal AI clusters.

Broad industry support from cloud providers, AI labs and hardware makers

Nvidia announced sweeping adoption across the AI ecosystem. Among the companies preparing Rubin‑based deployments:

- Cloud providers: AWS, Google Cloud, Microsoft Azure, Oracle Cloud, CoreWeave, Lambda, Nebius and Nscale.

- AI labs: Anthropic, OpenAI, Mistral AI, Cohere, Meta, xAI, Cursor, Perplexity, Runway and others.

- Hardware partners: Cisco, Dell, HPE, Lenovo, Supermicro.

- Storage and infrastructure companies: IBM, NetApp, Pure Storage, Nutanix, VAST Data, Canonical and more.

Microsoft said it will use Vera Rubin NVL72 systems as part of its next‑generation Fairwater AI superfactory sites, and Azure will offer Rubin‑optimized cloud instances for enterprise and research customers.

CoreWeave will begin integrating Rubin in the second half of 2026, touting gains for training, inference and future agentic workloads.

Deepening partnership with Siemens

In a separate announcement, Nvidia and Siemens (XFRA:SIE) revealed a major expansion of their partnership aimed at bringing AI automation to industrial workflows and real‑world physical systems. Nvidia will provide infrastructure, frameworks and simulation models, while Siemens will contribute hundreds of industrial AI experts and deploy the technologies internally before rolling them out to customers.

Availability

Nvidia says Rubin is already in full production, with Rubin‑based systems expected to be available from major OEMs and cloud providers in the second half of 2026.

With agentic AI and ultra‑large MoE models becoming central to the next phase of the AI race, Nvidia is positioning Rubin as the platform that will anchor a future built on million‑GPU‑scale AI factories.

Nvidia at the heart of the chip shortage

Despite the excitement surrounding Rubin, Nvidia’s ambitions unfold against the backdrop of a global chip crunch that shows no signs of easing. Explosive demand for AI infrastructure has already strained supplies of memory, storage and advanced packaging capacity, with analysts warning that components like high‑bandwidth memory (HBM) remain bottlenecked as manufacturers struggle to keep pace. Industry research suggests these shortages could persist into 2026, driven by the same hyperscalers racing to deploy GPU‑powered systems like Rubin, which consume vast quantities of cutting‑edge components. As a result, Nvidia sits at the centre of a feedback loop: its leadership in AI accelerators fuels unprecedented demand, that demand intensifies global supply constraints, and the resulting scarcity reinforces Nvidia’s dominance as customers wait, sometimes for months, for its chips.

About Nvidia

Nvidia Corp. is a full-stack computing infrastructure company.

Nvidia stock (NASDAQ:NVDA) opened trading 0.39 of a per cent higher at US$187.97 and is up more than 33 per cent since this time last year.

